Making media objects with Avendish

This book is two things: a tutorial on how to use Avendish, intertwined with an explanation of its concepts and implementation.

Avendish aims to let authors of media objects / processors write their processors in the most natural way possible, and then map them to host software or languages through so-called zero-cost abstractions.

By zero-cost abstraction, we mean zero run-time cost. In addition, we'll see that the system enables extremely short compile times compared to the norm in C++.

The library was born from:

- The necessity to reduce duplication among media processors in C++.

- The wish to express the quintessence of a given media processor:

  - There should be no compromise at any point: the user of the library must be able to declare all the properties, inputs and outputs of a media processor, whether it processes audio, video, MIDI, asynchronous messages, etc.

  - UIs must be possible.

  - GPU-based processors must be possible.

  - This should be expressed in the simplest possible way in C++, in the sense that the code should be as natural as possible: just declaring variables should be sufficient. The lack of proper reflection in C++ still limits this to some extent, but we will see that it is already possible to get quite far!

  - A given processor can be expressed in multiple ways, and we aim to capture most of them. For instance, it may be more natural to implement one audio processor sample-wise (each audio sample processed one by one), and another one buffer-wise.

- The observation that the implementation of a media processor has no reason to depend on any kind of binding library: those are two entirely orthogonal concerns. Yet, due to how the language works, for the longest time writing such a processor necessarily meant embedding it inside some kind of run-time framework: JUCE, DPF, iPlug, etc. These frameworks are all great, but they all make compromises in terms of what can be expressed: data types are limited to a known list, UIs have to be written with a specific UI framework, etc. In contrast, Avendish processors are much more open; processors can be written in their "canonical" form. The various bindings then try to map as much as possible to the environments they are bound to.

In addition, such frameworks are generally not suitable for embedded platforms such as microcontrollers. JUCE does not work on ESP32 :-)

In contrast, Avendish processors can be written in a way that does not depend on any existing library, not even the standard C or C++ libraries, which makes them trivially portable to such platforms. All that is really required is a C++ compiler!

Note that due to the developer's limited funding and time, not all backends support all features. In some cases, this is because the backend has no way to implement the feature at all (for instance, a string output port in a VST plug-in); in other cases, it is just for lack of time, but it's on the roadmap! In general, features are first prototyped in the ossia score back-end. They are then migrated to the other back-ends once the feature has proven useful and the API has been tested with a few different plug-ins and use-cases.

Why C++

To ease the porting of existing effects, most of which are also written in C++. Step by step, we may be able to lift them towards higher-level descriptions, but first, I believe that having something in C++ is important to capture the semantics of the vast majority of media processors in existence.

Also because this is the language I know best =p

Non-C++ alternatives exist: Faust and SOUL are the two best known and are great inspirations for Avendish; however, they mainly focus on audio processing. Avendish can be used to make purely message-based processors (for e.g. Max/MSP and PureData), Python objects, etc., or video processing objects. Video is currently implemented only for ossia score, but could easily be ported to e.g. Jitter for Max, GEM for PureData, etc.

What Avendish really is

- An ontology for media objects.

- An automated binding of a part of the C++ object semantics to other languages and run-time environments.

- An example implementation of this until C++ gets proper reflection and code generation features.

- Very, very, very, very uncompromising on its goals.

- Lots of fun C++20 code!

GIMME CODE

Here is an example of a complete audio processor that uses an optional library of helper types. If one wants, the exact same thing can be written without any macro or pre-existing type; it is just a tad more verbose.

```cpp
#include <halp/audio.hpp>
#include <halp/controls.hpp>
#include <halp/meta.hpp>
#include <cmath> // std::abs
// The UI layout helpers (halp::item, halp::spacing, halp::colors, halp::layouts)
// also need their own halp header.

struct MyProcessor {
  // Define generic metadata
  halp_meta(name, "Gain")
  halp_meta(author, "Professional DSP Development, Ltd.")
  halp_meta(uuid, "3183d03e-9228-4d50-98e0-e7601dd16a2e")

  // Define the inputs of our processor
  struct ins {
    halp::dynamic_audio_bus<"Input", double> audio;
    halp::knob_f32<"Gain", halp::range{.min = 0., .max = 1.}> gain;
  } inputs;

  // Define the outputs of our processor
  struct outs {
    halp::dynamic_audio_bus<"Output", double> audio;
    halp::hbargraph_f32<"Measure", halp::range{-1., 1., 0.}> measure;
  } outputs;

  // Define an optional UI layout
  struct ui {
    using enum halp::colors;
    using enum halp::layouts;

    halp_meta(name, "Main")
    halp_meta(layout, hbox)
    halp_meta(background, mid)

    struct {
      halp_meta(name, "Widget")
      halp_meta(layout, vbox)
      halp_meta(background, dark)

      const char* label = "Hello !";
      halp::item<&ins::gain> widget;
      const char* label2 = "Gain control!";
    } widgets;

    halp::spacing spc{.width = 20, .height = 20};
    halp::item<&outs::measure> widget2;
  };

  // Our process function
  void operator()(int N) {
    auto& in = inputs.audio;
    auto& out = outputs.audio;
    const double gain = inputs.gain;

    double measure = 0.;
    for (int i = 0; i < in.channels; i++)
    {
      for (int j = 0; j < N; j++)
      {
        out[i][j] = gain * in[i][j];
        measure += std::abs(out[i][j]);
      }
    }

    if (N > 0 && in.channels > 0)
      outputs.measure = measure / (N * in.channels);
  }
};
```

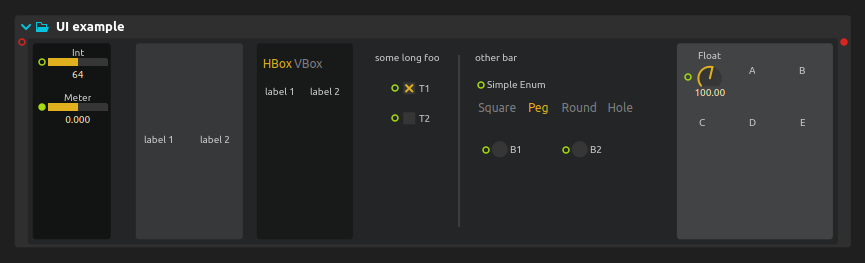

Here is how it looks like when compiled against the ossia score backend:

Getting started

Here is a minimal, self-contained definition of an Avendish processor:

```cpp
import std;

[[name: "Hello World"]]
export struct MyProcessor
{
  void operator()() {
    std::print("Henlo\n");
  }
};
```

... at least, in an alternative universe where C++ has gained custom attributes and reflection on those, and where modules and `std::print` work consistently across all compilers. In our universe, this is still a few years away. Keep hope, dear reader, keep hope, and read until the end of the page!

Getting started, for good

Here is a minimal, self-contained definition of an Avendish processor, which works on 2022 compilers:

```cpp
#pragma once
#include <cstdio>

struct MyProcessor
{
  static consteval auto name() { return "Hello World"; }

  void operator()() {
    printf("Henlo\n");
  }
};
```

Yes, it's not much. You may even already have some of these in your codebase without being aware of it!

Now, you may be used to the usual APIs for making audio plug-ins, and start wondering about all the things you are used to that are missing here:

- Inheritance, or storing function pointers in a C struct.

- Libraries: defining an Avendish processor does not in itself require including anything. A central point of the system is that everything can be defined through bare C++ constructs, without requiring the user to import types from a library. A library of helpers is nonetheless provided to simplify some repetitive cases, but it is in no way mandatory; if anything, I encourage anyone to try to make different helper APIs that fit different coding styles.

- Functions to process audio such as `void process(double** inputs, double** outputs, int frames);`

We'll see how all the usual amenities can be built on top of this and simple C++ constructs such as variables, methods and structures.

Line by line

```cpp
// This line is used to instruct the compiler to not include a header file multiple times.
#pragma once

// This line is used to allow our program to use `printf`:
#include <cstdio>

// This line declares a struct named MyProcessor. A struct can contain functions, variables, etc.
// It could also be a class: in C++, the only difference between the two is that members
// of a struct are public by default, while members of a class are private by default.
struct MyProcessor
{
  // This line declares a function that will return a visible name to show to our
  // users.
  // - static is used so that an instance of MyProcessor is not needed:
  //   we can just refer to the function as MyProcessor::name();
  // - consteval guarantees that the function is evaluated at compile-time,
  //   which may enable optimizations in the backends that will generate plug-ins.
  // - auto because it does not matter much here, we know that this is a string :-)
  static consteval auto name() { return "Hello World"; }

  // This line declares a special function that will allow our processor to be executed as follows:
  //
  //   MyProcessor the_processor;
  //   the_processor();
  //
  // ^ the second line will call the "operator()" function.
  void operator()()
  {
    // This one should hopefully be obvious :-)
    printf("Henlo\n");
  }
};
```

2025 update: it's happening!

C++ reflection and custom attributes / annotations will be part of C++26! Once support for them is implemented in Avendish, the actual syntax will most likely look like:

```cpp
import std;
import metadata;

[[=metadata::name{"Hello World"}]]
[[=metadata::description{"An introductory example"}]]
export struct MyProcessor
{
  void operator()() {
    std::print("Henlo\n");
  }
};
```

Thanks to the library's open-ended approach, all existing code will continue working :-)

Compiling our processor

Environment set-up

Before anything, we need a C++ compiler. The recommendation is to use Clang (at least clang-13); GCC 11 also works, with some limitations. Visual Studio is sadly still not competent enough. Clang can be obtained:

- On Windows, through llvm-mingw.

- On Mac, through Xcode.

- On Linux, through your distribution packages.

Avendish's code is header-only. However, CMake automates linking to the relevant libraries and generates a correct entry point for the targeted bindings, so we recommend installing it.

Ninja is recommended: it makes the build faster. Below are a few useful set-up commands for various operating systems.

Arch Linux, Manjaro, etc.

```console
$ sudo pacman -S base-devel cmake ninja clang gcc boost
```

Debian, Ubuntu, etc.

```console
$ sudo apt install build-essential cmake ninja-build libboost-dev
```

macOS with Homebrew

Xcode is required. Then:

```console
$ brew install cmake ninja boost
```

Windows with MSYS2 / MinGW

```console
$ pacman -S pactoys
$ pacboy -S cmake:p ninja:p toolchain:p boost:p
```

Install backend-specific dependencies

The APIs and SDKs that you wish to create plug-ins / bindings for must also be available:

- PureData: needs the PureData API.
  - `m_pd.h` and `pd.lib` must be findable through `CMAKE_PREFIX_PATH`.
  - On Linux, this is automatic if you install PureData through your distribution.

- Max/MSP: needs the Max SDK.
  - Pass `-DAVND_MAXSDK_PATH=/path/to/max/sdk` to CMake.

- Python: needs pybind11.
  - Installable through most distributions' repositories.

- ossia: needs libossia.

- clap: needs clap.

- UIs can be built with Qt or Nuklear.
  - Qt is easily installable through aqtinstall.

- VST3: needs the Steinberg VST3 SDK.
  - Pass `-DVST3_SDK_ROOT=/path/to/vst3/sdk` to CMake.

- By default, plug-ins compatible with most DAWs will be built through an obsolete, vintage, almost vestigial API. This does not require any specific dependency to be installed; on the other hand, it only supports audio plug-ins.

Building the template

The simplest way to get started is from the template repository: simply clear the Processor.cpp file for now and put the processor code above in Processor.hpp.

Here's a complete example (from bash):

```console
$ git clone https://github.com/celtera/avendish-audio-processor-template
$ mkdir build
$ cd build
$ cmake -G Ninja ../avendish-audio-processor-template
$ ninja # or, if CMake was run without -G Ninja: make -j8
```

This should produce various binaries in the build folder: for instance, a PureData object (in build/pd), a Python one (in build/python), etc.

Running the template in Python

Once the processor is built, we can, for instance, run it through the Python bindings:

```console
$ cd build/python

# Check that our processor was built correctly
$ ls
pymy_processor.so

# Run it
$ python
>>> import pymy_processor
>>> proc = pymy_processor.Hello_World()
>>> proc.process()
Henlo
```

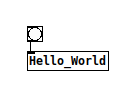

Running the template in PureData

Similarly, one can run the template in PureData:

```console
$ cd build/pd

# Check that our processor was built correctly
$ ls
my_processor.l_ia64

# Run it
$ pd -lib my_processor
```

Make the following patch:

When sending a bang, the terminal in which PureData was launched should also print "Henlo". We'll see in a later chapter how to print on Pd's own console instead.

Running in DAWs

We could, but so far our object is not really an object that makes sense in a DAW: it does not process audio in any way. We'll see in further chapters how to make audio objects.

Adding ports

Supported bindings: all

Our processor so far does not process much. It just reacts to an external trigger by invoking a print function.

Note that the way this trigger is invoked varies between environments: in Python, we called a `process()` function, while in PureData, we sent a bang to our object. That is one of the core philosophies of Avendish: bindings should make the object fit the environment's semantics and idioms as much as possible.

Most actual media processing systems work with the concept of ports to declare inputs and outputs, and Avendish embraces this fully.

Here is the code of a simple processor, which computes the sum of two numbers.

```cpp
struct MyProcessor
{
  static consteval auto name() { return "Addition"; }

  struct
  {
    struct { float value; } a;
    struct { float value; } b;
  } inputs;

  struct
  {
    struct { float value; } out;
  } outputs;

  void operator()() { outputs.out.value = inputs.a.value + inputs.b.value; }
};
```

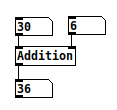

Compiling and running this yields for instance a PureData object which can be used like this:

Note that the object respects the usual semantics of PureData: sending a message to the leftmost inlet will trigger the computation. Sending a message to the other inlets will store the value internally but won't trigger the actual computation.

For some objects, other semantics may make sense: creating an alternative binding to PureData which would implement another behaviour, such as triggering the computation only on "bang" messages, or on any input on any inlet, would be a relatively easy task.

Let's try in Python:

```python
>>> import pymy_processor
>>> proc = pymy_processor.Addition()
>>> proc.process()
>>> proc.input_0 = 123
>>> proc.input_1 = 456
>>> proc.process()
>>> proc.output_0
579.0
```

Here the semantics follow usual "object" ones. You set some state on the object and call methods on it, which may change this state.

One could also make a binding that implements functional semantics, by passing the state of the processor as an immutable object instead. Python is already slow enough, though :p

Syntax explanation

Some readers may be surprised by the following syntax:

```cpp
struct { float value; } a;
```

What it does is declare a variable a whose type is an unnamed structure.

Note that this is a distinct concept from anonymous structures:

```cpp
struct { float value; };
```

which are legal in C but not in C++ (although most relevant compilers accept them), and are mostly useful for implementing unions:

```c
union vec3 {
  struct { float x, y, z; };
  struct { float r, g, b; };
};

union vec3 v;
v.x = 1.0;
v.g = 2.0;
```

Motivation for using unnamed structures in Avendish is explained afterwards.
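A property worth keeping in mind: each unnamed struct declaration introduces its own distinct type, even when two of them are written identically, and `decltype` can still name that type. The compile-time checks below illustrate this. The nesting mirrors the addition processor above; the aliases `a_type` and `b_type` are only for the illustration.

```cpp
#include <type_traits>
#include <utility>

struct MyProcessor
{
  struct
  {
    struct { float value; } a; // `a` is a variable whose type is an unnamed struct
    struct { float value; } b; // a *different* unnamed struct type, with the same contents
  } inputs;
};

// The unnamed types can still be referred to through decltype.
using a_type = decltype(std::declval<MyProcessor&>().inputs.a);
using b_type = decltype(std::declval<MyProcessor&>().inputs.b);

static_assert(!std::is_same_v<a_type, b_type>); // two distinct types
static_assert(std::is_same_v<decltype(a_type::value), float>);
```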

Naming things

In an ideal world, what we would have loved is writing the following code:

```cpp
struct MyProcessor
{
  static consteval auto name() { return "Addition"; }

  struct
  {
    float a;
    float b;
  } inputs;

  struct
  {
    float out;
  } outputs;

  void operator()() { outputs.out = inputs.a + inputs.b; }
};
```

and have our Python processor expose variables named `a`, `b` and `out`. Sadly, without reflection on names, this is not possible yet. In the meantime, we thus use structs to embed metadata relative to the ports:

```cpp
struct MyProcessor
{
  static consteval auto name() { return "Addition"; }

  struct
  {
    struct {
      static consteval auto name() { return "a"; }
      float value;
    } a;

    struct {
      static consteval auto name() { return "b"; }
      float value;
    } b;
  } inputs;

  struct
  {
    struct {
      static consteval auto name() { return "out"; }
      float value;
    } out;
  } outputs;

  void operator()() { outputs.out.value = inputs.a.value + inputs.b.value; }
};
```

Now our Python example is cleaner to use:

```python
>>> import pymy_processor
>>> proc = pymy_processor.Addition()
>>> proc.process()
>>> proc.a = 123
>>> proc.b = 456
>>> proc.process()
>>> proc.out
579.0
```

Refactoring

One can see how writing:

```cpp
struct {
  static consteval auto name() { return "foobar"; }
  float value;
} foobar;
```

for 200 controls would get boring quickly. In addition, the implementation of our processing function is not as clean as we'd want: in an ideal world, it would just be:

```cpp
void operator()() { outputs.out = inputs.a + inputs.b; }
```

Thankfully, we can introduce our own custom abstractions without breaking anything: the only thing that matters is that they follow the "shape" of what a parameter is.

This shape is defined (as a first approximation) as follows:

```cpp
template<typename T>
concept parameter = requires (T t) { t.value = {}; };
```

In C++ parlance, this means that a type can be recognized as a parameter if:

- It has an accessible member called `value`.
- This member can be assigned from an empty initializer `{}`, i.e. from a default value.

For instance:

```cpp
struct bad_1 {
  const int value;
};

struct bad_2 {
  void value();
};

class bad_3 {
  int value;
};
```

are all invalid parameters.

This can be ensured easily by asking the compiler:

```cpp
static_assert(!parameter<bad_1>);
static_assert(!parameter<bad_2>);
static_assert(!parameter<bad_3>);
```

`static_assert` is a C++ feature which checks a predicate at compile-time: if the predicate is false, the compiler reports an error.

Avendish will simply not recognize such types as parameters, and they won't be accessible anywhere.

Here are examples of valid parameters:

```cpp
#include <cstdio>
#include <string>

struct good_1 {
  int value;
};

struct good_2 {
  std::string value;
};

template<typename T>
struct assignable {
  T& operator=(T x) {
    printf("I changed !\n");
    this->v = x;
    return this->v;
  }

  T v;
};

class good_3 {
public:
  assignable<double> value;
};
```

This can be ensured again by asking the compiler:

```cpp
static_assert(parameter<good_1>);
static_assert(parameter<good_2>);
static_assert(parameter<good_3>);
```

Avendish provides a helper library, halp (Helper Abstractions for Literate Programming), whose types match this pattern. However, users are encouraged to develop their own abstractions that fit their preferred coding style :-)

Ports with the helper library

Here is how our processor looks with the current set of helpers:

```cpp
#pragma once
#include <halp/controls.hpp>

struct MyProcessor
{
  // halp_meta(A, B) expands to static consteval auto A() { return B; }
  halp_meta(name, "Addition")

  // In a perfect world one would just define attributes on the struct instead...
  //
  //   [[name: "Addition"]]
  //   struct MyProcessor { ... };
  //
  // or more realistically, `static constexpr auto name = "foo";`
  // which would be an acceptable compromise.

  struct
  {
    // val_port is a simple type which contains
    // - a member value of type float
    // - the name() metadata method
    // - helper operators to allow easy assignment and use of the value.
    halp::val_port<"a", float> a;
    halp::val_port<"b", float> b;
  } inputs;

  struct
  {
    halp::val_port<"out", float> out;
  } outputs;

  void operator()() { outputs.out = inputs.a + inputs.b; }
};
```

If one really does not like templates, the following macro could be defined instead to make custom ports:

```cpp
#include <string>

#define my_value_port(Name, Type) \
  struct { \
    static consteval auto name() { return #Name; } \
    Type value; \
  } Name;

// Used like:
my_value_port(a, float)
my_value_port(b, std::string)
// ... etc ...
```

Likewise, if the metaclasses proposal ever comes to pass, it will be possible to convert:

```cpp
meta_struct
{
  float a;
  float b;
} inputs;
```

into a struct of the right shape, automatically, at compile-time, and all the current bindings will keep working.

Port metadata

Our ports so far are very simple: floating-point values, without any more information attached than a name to show to the user.

Most of the time, we'll want to attach some semantic metadata to the ports: for instance, a range of acceptable values, the kind of UI widget that should be shown to the user, etc.

Defining a min/max range

Supported bindings: all

Here is how one can define a port with such a range:

```cpp
struct {
  static consteval auto name() { return "foobar"; }

  struct range {
    float min = -1.;
    float max = 1.;
    float init = 0.5;
  };

  float value{};
} foobar;
```

Here is another version which will be picked up too:

```cpp
struct {
  static consteval auto name() { return "foobar"; }

  static consteval auto range() {
    struct {
      float min = -1.;
      float max = 1.;
      float init = 0.5;
    } r;
    return r;
  }

  float value{};
} foobar;
```

More generally, in most cases, Avendish will try to make sense of the things the author declares, whether they are types, variables or functions. This is not implemented entirely consistently yet, but it is a goal of the library in order to enable various coding styles and as much freedom of expression as possible for the media processor developer.

Keeping metadata static

Note that we should still be careful, in our struct definitions, not to declare regular member variables for common metadata: they would take valuable memory and mess with our cache lines, reducing performance for no good reason. Imagine instantiating 10000 "processor" objects: you do not want each of them to carry the overhead of storing the range as a member variable, as in this example:

```cpp
struct {
  const char* name = "foobar";
  struct {
    float min = -1.;
    float max = 1.;
    float init = 0.5;
  } range;
  float value{};
} foobar;

// In this case:
static_assert(sizeof(foobar) == 4 * sizeof(float) + sizeof(const char*));
// sizeof(foobar) == 24 on 64-bit systems

// With the previous definitions (static name() function, nested range type), the "name"
// and "range" information is stored in a static space in the binary; its cost is paid
// only once. For such a foobar:
static_assert(sizeof(foobar) == sizeof(float));
// sizeof(foobar) == 4
```

Testing on a processor

If we modify our example processor this way:

```cpp
struct MyProcessor
{
  static consteval auto name() { return "Addition"; }

  struct
  {
    struct {
      static consteval auto name() { return "a"; }
      struct range {
        float min = -10.;
        float max = 10.;
        float init = 0.;
      };
      float value;
    } a;

    struct {
      static consteval auto name() { return "b"; }
      struct range {
        float min = -1.;
        float max = 1.;
        float init = 0.;
      };
      float value;
    } b;
  } inputs;

  struct
  {
    struct {
      static consteval auto name() { return "out"; }
      float value;
    } out;
  } outputs;

  void operator()() { outputs.out.value = inputs.a.value + inputs.b.value; }
};
```

then some backends will start to be able to do interesting things, like showing relevant UI widgets, or clamping the inputs / outputs.

This is sadly not possible in all back-ends. Consider for instance PureData: the way one adds a port is by passing a pointer to a floating-point value to Pd, which then writes the inbound value directly at that memory address. There is no point at which we could plug in to clamp the value.

Two alternatives would be possible in this case:

- Change the back-end to instead expect all messages on the first inlet, as those can be captured. This would likely yield lower performance, as one would now have to pass a symbol indicating the parameter so that the object knows to which port the input should map.

- Implement an abstraction layer which would duplicate the parameters with their clamped version, and clamp all parameters whenever the process function gets called. This would, however, hurt performance and memory use.

Defining UI widgets

Supported bindings: ossia

Avendish can recognize a few names that will indicate that a widget of a certain type must be created.

For instance:

```cpp
struct {
  enum { knob };

  static consteval auto name() { return "foobar"; }

  struct range {
    float min = -1.;
    float max = 1.;
    float init = 0.5;
  };

  float value{};
} foobar;
```

Simply adding the enum definition in the struct will allow the bindings to detect it at compile-time, and instantiate an appropriate UI control.

The following widget names are currently recognized (names on the same line belong to the same family of widgets):

- `bang`, `impulse`
- `button`, `pushbutton`
- `toggle`, `checkbox`
- `hslider`, `vslider`, `slider`
- `spinbox`
- `knob`
- `lineedit`
- `choices`, `enumeration`
- `combobox`, `list`
- `xy`, `xy_spinbox`
- `xyz`, `xyz_spinbox`
- `color`
- `time_chooser`
- `hbargraph`, `vbargraph`, `bargraph`
- `range_slider`, `range_spinbox`

This kind of widget definition is there to enable hosts such as DAWs to generate appropriate UIs automatically.

A further chapter will present how to create entirely custom painted UIs and widgets.

Defining a mapping function

Supported bindings: ossia

In audio, it is common for a range to be best manipulated by the user in logarithmic space, for instance when adjusting frequencies, as the human ear is more sensitive to a 100 Hz variation around 100 Hz than around 15 kHz.

We can define custom mapping functions as part of the control, which will define how the UI controls shall behave:

```cpp
#include <cmath>

struct {
  static consteval auto name() { return "foobar"; }

  struct mapper {
    static float map(float x) { return std::pow(x, 10.); }
    static float unmap(float y) { return std::pow(y, 1./10.); }
  };

  struct range {
    float min = 20.;
    float max = 20000.;
    float init = 100.;
  };

  float value{};
} foobar;
```

The mapping function must be a bijection of [0; 1] onto itself.

That is, the map and unmap functions you choose must satisfy:

- for all x in [0; 1], map(x) is in [0; 1]
- for all x in [0; 1], unmap(x) is in [0; 1]
- for all x in [0; 1], unmap(map(x)) == x
- for all x in [0; 1], map(unmap(x)) == x

For instance, sin(x) or ln(x) do not work, but the x^N / x^(1/N) pair works.

Note that for now, mappings are only supported for floating-point sliders and knobs, in ossia.
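The four conditions can be sanity-checked numerically. Below is a hypothetical sketch, not an Avendish utility, that samples [0; 1] on a regular grid and allows a small tolerance for floating-point rounding. It also includes one plausible way a UI could turn a normalized slider position into a parameter value, assuming a linear rescale of the mapped position to [min; max]. That rescale is an assumption for illustration.

```cpp
#include <cassert>
#include <cmath>

// The mapper from the example above.
struct pow10_mapper
{
  static float map(float x) { return std::pow(x, 10.); }
  static float unmap(float y) { return std::pow(y, 1. / 10.); }
};

// sin / asin, which do NOT satisfy the conditions: unmap(1) = pi/2 > 1.
struct sin_mapper
{
  static float map(float x) { return std::sin(x); }
  static float unmap(float y) { return std::asin(y); }
};

// Hypothetical check of the four conditions on a regular grid of [0; 1].
template <typename M>
bool looks_like_unit_bijection(int steps = 1000, double tolerance = 1e-4)
{
  for (int i = 0; i <= steps; ++i)
  {
    const float x = float(i) / float(steps);
    const float m = M::map(x);
    const float u = M::unmap(x);
    if (m < 0.f || m > 1.f || u < 0.f || u > 1.f)
      return false;
    if (std::abs(M::unmap(m) - x) > tolerance || std::abs(M::map(u) - x) > tolerance)
      return false;
  }
  return true;
}

// Hypothetical: slider position in [0; 1] -> parameter value in [min; max].
template <typename M>
double slider_to_value(float position, double min, double max)
{
  return min + M::map(position) * (max - min);
}
```

Under this assumption, with the x^10 mapper and the 20 Hz to 20 kHz range above, the middle of the slider corresponds to 20 + 0.5^10 × 19980 ≈ 39.5 Hz, which leaves most of the slider's travel to the low frequencies.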

With helpers

A few pre-made mappings are provided: the above could be rewritten as:

```cpp
struct : halp::hslider_f32<"foobar", halp::range{20., 20000., 100.}> {
  using mapper = halp::pow_mapper<10>;
} foobar;
```

The complete list is provided in `<halp/mappers.hpp>`:

```cpp
// #include: <halp/mappers.hpp>
#pragma once

#include <cmath>
#include <halp/modules.hpp>
#include <ratio>

HALP_MODULE_EXPORT
namespace halp
{
// FIXME: when clang supports double arguments we can use them here instead
template <typename Ratio>
struct log_mapper
{
  // Formula from http://benholmes.co.uk/posts/2017/11/logarithmic-potentiometer-laws
  static constexpr double mid = double(Ratio::num) / double(Ratio::den);
  static constexpr double b = (1. / mid - 1.) * (1. / mid - 1.);
  static constexpr double a = 1. / (b - 1.);

  static double map(double v) noexcept { return a * (std::pow(b, v) - 1.); }
  static double unmap(double v) noexcept { return std::log(v / a + 1.) / std::log(b); }
};

template <int Power>
struct pow_mapper
{
  static double map(double v) noexcept { return std::pow(v, (double)Power); }
  static double unmap(double v) noexcept { return std::pow(v, 1. / Power); }
};

template <typename T>
struct inverse_mapper
{
  static double map(double v) noexcept { return T::unmap(v); }
  static double unmap(double v) noexcept { return T::map(v); }
};
}
```
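The constants of `log_mapper` can be checked by hand. Write $m$ for `mid`, the ratio passed as template argument, and use the definitions from the code. The derivation below shows that the endpoints are preserved, that `unmap` inverts `map`, and that `mid` is the value reached at the middle of the slider (the last line):

```latex
\begin{aligned}
b &= \left(\tfrac{1}{m} - 1\right)^{2}, \qquad a = \frac{1}{b - 1}, \qquad \operatorname{map}(v) = a\,(b^{v} - 1) \\
\operatorname{map}(0) &= a\,(1 - 1) = 0, \qquad \operatorname{map}(1) = a\,(b - 1) = 1 \\
a\,(b^{v} - 1) = y &\iff b^{v} = \frac{y}{a} + 1 \iff v = \frac{\ln(y/a + 1)}{\ln b} = \operatorname{unmap}(y) \\
\operatorname{map}\!\left(\tfrac{1}{2}\right) &= a\,(\sqrt{b} - 1) = \frac{\sqrt{b} - 1}{(\sqrt{b} - 1)(\sqrt{b} + 1)} = \frac{1}{\sqrt{b} + 1} = \frac{1}{\left(\tfrac{1}{m} - 1\right) + 1} = m \qquad (0 < m < 1)
\end{aligned}
```

This requires $m \neq \tfrac{1}{2}$: in that case $b = 1$, and both $a$ and $1/\ln b$ divide by zero. That is the linear case, where no mapper is needed. For instance, `halp::log_mapper<std::ratio<85, 100>>` places 85% of the range at the middle of the slider.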

Smoothing values

Supported bindings: ossia

It is common to require controls to be smoothed over time, in order to prevent clicks and pops in the sound.

Avendish allows this: simply define a `smoother` struct which specifies over how many milliseconds the control changes must be smoothed.

```cpp
struct {
  static consteval auto name() { return "foobar"; }

  struct smoother {
    float milliseconds = 10.;
  };

  struct range {
    float min = 20.;
    float max = 20000.;
    float init = 100.;
  };

  float value{};
} foobar;
```

It is also possible to get precise control over the smoothing ratio, depending on the control update rate (sample rate or buffer rate).

Helpers

Helper types are provided:

```cpp
// #include: <halp/smoothers.hpp>
#pragma once

#include <cmath>
#include <halp/modules.hpp>

HALP_MODULE_EXPORT
namespace halp
{
// A basic smooth specification
template <int T>
struct milliseconds_smooth
{
  static constexpr float milliseconds{T};

  // Alternative:
  // static constexpr std::chrono::milliseconds duration{T};
};

// Same thing but explicit control over the smoothing
// ratio
template <int T>
struct exp_smooth
{
  static const constexpr double pi = 3.141592653589793238462643383279502884;

  static constexpr auto ratio(double sample_rate) noexcept
  {
    return std::exp(-2. * pi / (T * 1e-3 * sample_rate));
  }
};
}
```
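To show what the `ratio` of `exp_smooth` can be used for, here is a sketch of a classic one-pole exponential smoother driven by that formula. This is an assumption made for illustration, not necessarily how the ossia binding applies smoothing; the names `smoothing_ratio` and `one_pole_smoother` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Same formula as halp::exp_smooth<T>::ratio above, with T given in milliseconds.
inline double smoothing_ratio(double milliseconds, double sample_rate)
{
  const double pi = 3.141592653589793238462643383279502884;
  return std::exp(-2. * pi / (milliseconds * 1e-3 * sample_rate));
}

// Hypothetical one-pole smoother: at each step, the current value moves
// a fraction (1 - ratio) of the remaining distance towards the target.
struct one_pole_smoother
{
  double ratio{};
  double current{};

  double step(double target) noexcept
  {
    current = ratio * current + (1. - ratio) * target;
    return current;
  }
};
```

With this formula, after `T` milliseconds (960 samples for 20 ms at 48 kHz) the remaining distance to the target is e^(-2π), about 0.19% of the initial jump, so the control has practically settled.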

Usage example

These examples smooth the gain control parameter, either over a buffer in the first case, or for each sample in the second case.

```cpp
#pragma once

/* SPDX-License-Identifier: GPL-3.0-or-later */

#include <halp/audio.hpp>
#include <halp/controls.hpp>
#include <halp/meta.hpp>
#include <halp/smoothers.hpp>

#include <vector>

namespace examples::helpers
{
/**
 * Smooth gain
 */
class SmoothGainPoly
{
public:
  halp_meta(name, "Smooth Gain")
  halp_meta(c_name, "avnd_helpers_smooth_gain")
  halp_meta(uuid, "032e1734-f84a-4eb2-9d14-01fc3dea4c14")

  using setup = halp::setup;
  using tick = halp::tick;

  struct
  {
    halp::dynamic_audio_bus<"Input", double> audio;

    struct : halp::hslider_f32<"Gain", halp::range{.min = 0., .max = 1., .init = 0.5}>
    {
      struct smoother
      {
        float milliseconds = 20.;
      };
    } gain;
  } inputs;

  struct
  {
    halp::dynamic_audio_bus<"Output", double> audio;
  } outputs;

  void prepare(halp::setup info) { }

  // Do our processing for N samples
  void operator()(halp::tick t)
  {
    // Process the input buffer
    for(int i = 0; i < inputs.audio.channels; i++)
    {
      auto* in = inputs.audio[i];
      auto* out = outputs.audio[i];

      for(int j = 0; j < t.frames; j++)
      {
        out[j] = inputs.gain * in[j];
      }
    }
  }
};

class SmoothGainPerSample
{
public:
  halp_meta(name, "Smooth Gain")
  halp_meta(c_name, "avnd_helpers_smooth_gain")
  halp_meta(uuid, "032e1734-f84a-4eb2-9d14-01fc3dea4c14")

  struct inputs
  {
    halp::audio_sample<"Input", double> audio;

    struct : halp::hslider_f32<"Gain", halp::range{.min = 0., .max = 1., .init = 0.5}>
    {
      using smoother = halp::milliseconds_smooth<20>;
    } gain;
  };

  struct outputs
  {
    halp::audio_sample<"Output", double> audio;
  };

  // Process a single sample
  void operator()(const inputs& inputs, outputs& outputs)
  {
    outputs.audio.sample = inputs.audio.sample * inputs.gain;
  }
};
}
```

Widget helpers

Supported bindings: depending on the underlying data type

To simplify the common use case of defining a port such as "slider with a range", a set of common helper types is provided.

Here is our example, now as refined as it can be: almost no character is superfluous or needlessly repeated, except the names of the controls:

```cpp
#pragma once
#include <halp/controls.hpp>

struct MyProcessor
{
  halp_meta(name, "Addition")

  struct
  {
    halp::hslider_f32<"a", halp::range{.min = -10, .max = 10, .init = 0}> a;
    halp::knob_f32<"b" , halp::range{.min = -1, .max = 1, .init = 0}> b;
  } inputs;

  struct
  {
    halp::hbargraph_f32<"out", halp::range{.min = -11, .max = 11, .init = 0}> out;
  } outputs;

  void operator()() { outputs.out = inputs.a + inputs.b; }
};
```

This is how an environment such as ossia score renders it:

Note that even with our helper types, the following holds:

```cpp
static_assert(sizeof(MyProcessor) == 3 * sizeof(float));
```

That is, an instance of our object weighs in memory exactly the size of its inputs and outputs, nothing else. In addition, the binding libraries try extremely hard to not allocate any memory dynamically, which leads to very concise memory representations of our media objects.

Port update callback

Supported bindings: ossia

It is possible to get a callback whenever the value of a (value) input port gets updated, to perform complex actions.

update will always be called before the current tick starts.

The port simply needs to implement a void update(T&) { } method, where T is the processor type that contains the port:

Example

struct MyProcessor

{

static consteval auto name() { return "Addition"; }

struct

{

struct {

static consteval auto name() { return "a"; }

void update(MyProcessor& proc) { /* called when 'a.value' changes */ }

float value;

} a;

struct {

static consteval auto name() { return "b"; }

void update(MyProcessor& proc) { /* called when 'b.value' changes */ }

float value;

} b;

} inputs;

struct

{

struct {

static consteval auto name() { return "out"; }

float value;

} out;

} outputs;

void operator()() { outputs.out.value = inputs.a.value + inputs.b.value; }

};

Usage with helpers

To add an update method to a helper type, simply inherit from it:

#pragma once

#include <halp/controls.hpp>

struct MyProcessor

{

halp_meta(name, "Addition")

struct

{

struct : halp::val_port<"a", float> {

void update(MyProcessor& proc) { /* called when 'a.value' changes */ }

} a;

halp::val_port<"b", float> b;

} inputs;

struct

{

halp::val_port<"out", float> out;

} outputs;

void operator()() { outputs.out = inputs.a + inputs.b; }

};

Writing audio processors

Supported bindings: ossia, vst, vst3, clap, Max, Pd

The processors we wrote until now only processed "control" values.

As a convention, those are values that change infrequently, relative to the audio rate: every few milliseconds, as opposed to every few dozen microseconds for individual audio samples.

Argument-based processors

Let's see how one can write a simple audio filter in Avendish:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

float operator()(float input)

{

return std::tanh(input);

}

};

That's it. That's the processor :-)

Maybe you are used to writing processors that operate with buffers of samples. Fear not, here is another valid Avendish audio processor, which should reassure most readers:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

static consteval auto input_channels() { return 2; }

static consteval auto output_channels() { return 2; }

void operator()(double** inputs, double** outputs, int frames)

{

for (int c = 0; c < input_channels(); ++c)

{

for (int k = 0; k < frames; k++)

{

outputs[c][k] = std::tanh(inputs[c][k]);

}

}

}

};

A middle ground is also possible: a processor that handles a single channel. Either float or double can be used in the definition of the processor:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

void operator()(float* inputs, float* outputs, int frames)

{

for (int k = 0; k < frames; k++)

{

outputs[k] = std::tanh(inputs[k]);

}

}

};

All these forms make it quick to write very simple effects, and they already cover a lot of ground. For more advanced systems, with side-chains and such, it is preferable to use proper ports instead.

Port-based processors

Here are four examples of valid audio ports:

- Sample-wise

struct {

static consteval auto name() { return "In"; }

float sample{};

};

- Channel-wise

struct {

static consteval auto name() { return "Out"; }

float* channel{};

};

- Bus-wise, with a fixed channel count. Here, bindings will ensure that there are always as many channels allocated.

struct {

static consteval auto name() { return "Ins"; }

static constexpr int channels() { return 2; }

float** samples{}; // At some point this should be renamed bus...

};

- Bus-wise, with a modifiable channel count. Here, bindings will allocate exactly as many channels as the end-user of the software requested; this count will be stored in channels.

struct {

static consteval auto name() { return "Outs"; }

int channels = 0;

double** samples{}; // At some point this should be renamed bus...

};

An astute reader may wonder why one could not fix a channel count by writing const int channels = 2; instead of static constexpr int channels() { return 2; }. Sadly, this would make our types non-assignable, which makes things harder. It would also use some bytes in each instance of the processor. A viable middle ground could be static constexpr int channels = 2; but C++ does not allow static data members in unnamed types, so this does not leave a lot of choice.
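Both drawbacks are easy to check with type traits. In this sketch, where the type names are illustrative, the const data member removes copy assignment and takes storage. The static member function does neither.

```cpp
#include <type_traits>

struct bus_with_const_member {
  const int channels = 2;   // stored in every instance, and blocks assignment
  float** samples{};
};

struct bus_with_static_function {
  static constexpr int channels() { return 2; } // no per-instance storage
  float** samples{};
};

// A const data member deletes the implicit copy-assignment operator.
static_assert(!std::is_copy_assignable_v<bus_with_const_member>);
static_assert(std::is_copy_assignable_v<bus_with_static_function>);

// The static member function adds nothing to the object size.
static_assert(sizeof(bus_with_static_function) == sizeof(float**));
```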

Process function for ports

For port-based processors, the process function takes the number of frames as its argument. Here is a complete, bare example of a gain processor.

struct Gain {

static constexpr auto name() { return "Gain"; }

struct {

struct {

static constexpr auto name() { return "Input"; }

const double** samples;

int channels;

} audio;

struct {

static constexpr auto name() { return "Gain"; }

struct range {

const float min = 0.;

const float max = 1.;

const float init = 0.5;

};

float value;

} gain;

} inputs;

struct {

struct {

static constexpr auto name() { return "Output"; }

double** samples;

int channels;

} audio;

} outputs;

void operator()(int N) {

auto& in = inputs.audio.samples;

auto& out = outputs.audio.samples;

for (int i = 0; i < inputs.audio.channels; i++)

for (int j = 0; j < N; j++)

out[i][j] = inputs.gain.value * in[i][j];

}

};

Helpers

halp provides helper types for these common cases:

halp::audio_sample<"A", double> audio;

halp::audio_channel<"B", double> audio;

halp::fixed_audio_bus<"C", double, 2> audio;

halp::dynamic_audio_bus<"D", double> audio;

Important: it is not possible to mix different types of audio ports in a single processor: audio sample and audio bus operate necessarily on different time-scales that are impossible to combine in a single function. Technically, it would be possible to combine audio channels and audio buses, but for the sake of simplicity this is currently forbidden.

Likewise, it is forbidden to mix float and double inputs for audio ports (as it simply does not make sense: no host in existence is able to provide audio in two different formats at the same time).

Gain processor, helpers version

The exact same example as above, just shorter to write :)

struct Gain {

static constexpr auto name() { return "Gain"; }

struct {

halp::dynamic_audio_bus<"Input", double> audio;

halp::hslider_f32<"Gain", halp::range{0., 1., 0.5}> gain;

} inputs;

struct {

halp::dynamic_audio_bus<"Output", double> audio;

} outputs;

void operator()(int N) {

auto& in = inputs.audio;

auto& out = outputs.audio;

const float gain = inputs.gain;

for (int i = 0; i < in.channels; i++)

for (int j = 0; j < N; j++)

out[i][j] = gain * in[i][j];

}

};

Further work

We currently have the following matrix of possible forms of audio ports:

| | 1 channel | N channels |
|---|---|---|
| 1 frame | float sample; | ??? |
| N frames | float* channel; | float** samples; |

For the N channels / 1 frame case, one could imagine for instance:

struct {

float bus[2]; // Fixed channels case

}

or

struct {

float* bus; // Dynamic channels case

}

to indicate a per-sample, multi-channel bus, but this has not been implemented yet.

Monophonic processors

Supported bindings: ossia, vst, vst3, clap, Max

There are three special cases:

- Processors with one sample input and one sample output.

- Processors with one channel input and one channel output.

- Processors with one dynamic bus input, one dynamic bus output, and no fixed channels being specified.

In these three cases, the processor is recognized as polyphony-friendly. In cases 1 and 2, this means that the processor may automatically be instantiated multiple times when used in, for example, a stereo DAW.

In case 3, the channels of inputs and outputs will be set to the same count, which comes from the host.
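Here is a hypothetical sketch of what automatic instantiation amounts to in cases 1 and 2, for the sample-wise case with a fixed channel count. Each channel gets its own independent processor instance, so internal state never leaks from one channel to another. per_channel and RunningSum are illustrative names.

```cpp
#include <array>
#include <cstddef>

// Hypothetical per-channel wrapper: one independent instance per channel.
template <typename Processor, std::size_t Channels>
struct per_channel {
  std::array<Processor, Channels> voices{};

  // in[c][k] / out[c][k]: channel c, frame k
  constexpr void operator()(const float* const* in, float* const* out, int frames) {
    for (std::size_t c = 0; c < Channels; ++c)
      for (int k = 0; k < frames; ++k)
        out[c][k] = voices[c](in[c][k]);
  }
};

// A stateful sample-wise processor: outputs the running sum of its input.
struct RunningSum {
  float sum{};
  constexpr float operator()(float x) { sum += x; return sum; }
};
```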

Polyphonic processors should use types for their I/O

Let's consider the following processor:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

struct {

struct { float value; } gain;

} inputs;

double operator()(double input)

{

accumulator = std::fmod(accumulator+1.f, 10.f);

return std::tanh(inputs.gain.value * input + accumulator);

}

private:

double accumulator{};

};

We have three different values involved:

- input is the audio sample that is to be processed.

- inputs.gain.value is an external control which increases or decreases the distortion.

- accumulator is an internal variable used by the processing algorithm.

Now consider this in the context of polyphony. The only thing we can do is instantiate MyProcessor multiple times, once per channel.

- We cannot call operator() of a single instance on multiple channels, as the internal state must stay separate for each channel.

- But then the inputs are duplicated in every instance. If we want to implement a filter bank with thousands of duplicated processors in parallel, and they all depend on the same gain value, this would be a huge waste of memory.

Thus, it is recommended in this case to use the following form:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

struct inputs {

struct { float value; } gain;

};

struct outputs { };

double operator()(double input, const inputs& ins, outputs& outs)

{

accumulator = std::fmod(accumulator+1.f, 10.f);

return std::tanh(ins.gain.value * input + accumulator);

}

private:

double accumulator{};

};

Here, Avendish will instantiate a single inputs object, shared across all polyphony voices. This will likely use less memory and perform better when there are many parameters and voices.
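Here is a minimal sketch of the memory argument. It assumes a simplified, constexpr-friendly variant of the processor above, with std::tanh and std::fmod dropped. Each voice only stores its own accumulator, and a single inputs object is passed to every voice. run_voices is a hypothetical stand-in for the binding.

```cpp
#include <array>
#include <cstddef>

struct Shaper {
  struct inputs { struct { float value{1.f}; } gain; };
  struct outputs { };

  // Per-voice state only: the inputs are not a data member.
  double accumulator{};

  constexpr double operator()(double input, const inputs& ins, outputs&) {
    accumulator += 1.0;
    return ins.gain.value * input + accumulator;
  }
};

// Hypothetical binding: every voice reads the same shared inputs object.
template <std::size_t N>
constexpr void run_voices(std::array<Shaper, N>& voices, const Shaper::inputs& shared,
                          const double (&in)[N], double (&out)[N]) {
  Shaper::outputs outs{};
  for (std::size_t v = 0; v < N; ++v)
    out[v] = voices[v](in[v], shared, outs);
}

// Each voice costs exactly its state, not a copy of the inputs.
static_assert(sizeof(Shaper) == sizeof(double));
```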

Here is what I would call the "canonic" form of this version. It additionally uses our helpers to reduce typing, and passes the audio samples through ports instead of through arguments:

struct MyProcessor

{

static consteval auto name() { return "Distortion"; }

struct inputs {

halp::audio_sample<"In", double> audio;

halp::hslider_f32<"Gain", halp::range{.min = 0, .max = 100, .init = 1}> gain;

};

struct outputs {

halp::audio_sample<"Out", double> audio;

};

void operator()(const inputs& ins, outputs& outs)

{

accumulator = std::fmod(accumulator + 0.01f, 10.f);

outs.audio = std::tanh(ins.gain * ins.audio + accumulator);

}

private:

double accumulator{};

};

Passing inputs and outputs as types is also possible for all the other forms described previously - everything is possible, write your plug-ins as it suits you best :) and who knows, maybe with metaclasses one would also be able to generate the more efficient form directly.

Audio setup

Supported bindings: ossia, vst, vst3, clap, Max, Pd

It is fairly common for audio systems to need to have some buffers allocated or perform pre-computations depending on the sample rate and buffer size of the system.

This can be done by adding the following method in the processor:

void prepare(/* some_type */ info) {

...

}

some_type can be a custom type with the following allowed fields:

- rate: will be filled with the sample rate.

- frames: will be filled with the maximum frame (buffer) size.

- input_channels / output_channels: for processors with an unspecified number of channels, the channel counts will be notified here.

- Alternatively, just specifying channels works too if inputs and outputs are expected to have the same count.

- instance: gives each processor instance a unique identifier. If the host supports it, this identifier will be serialized / deserialized across restarts of the host and thus stay constant.

Those variables must be assignable, and are all optional (remember the foreword: Avendish is UNCOMPROMISING).

Here are some valid examples:

- No member at all: this can be used to just notify the processor that processing is about to start.

struct setup_a { };

void prepare(setup_a info) {

...

}

- Most common use case

struct setup_b {

float rate{};

int frames{};

};

void prepare(setup_b info) {

...

}

- For variable channels in simple audio filters:

struct setup_c {

float rate{};

int frames{};

int channels{};

};

void prepare(setup_c info) {

...

}

Helper library

halp provides halp::setup, which covers the most usual use cases:

void prepare(halp::setup info) {

info.rate; // etc...

}

How does this work ?

If you are interested in the implementation, it is actually fairly simple.

- First, if the function exists, we extract the arguments of prepare to get the type T of its first argument (see avnd/common/function_reflection.hpp for the method).

- Then we do the following for each field, if it exists:

using T = /* type of the argument in prepare(T t) */;

T t;

if constexpr(requires (T t) { t.frames = 123; })

t.frames = ... the buffer size reported by the DAW ...;

if constexpr(requires (T t) { t.rate = 44100; })

t.rate = ... the sample-rate reported by the DAW ...;

This way, the algorithm only pays for the variables it actually uses. This is not very important here, but it is a good reference implementation of the technique for other parts of the system where it matters more.

Audio arguments

Supported bindings: ossia, vst, vst3, clap, Max, Pd

In addition to the global set-up step, one may require per-process-step arguments. The most common need is, for instance, the current tempo.

The infrastructure put in place for this is very similar to the one previously mentioned for the setup step.

This is done simply by passing it as the last argument of the processing operator() function.

If there is such a type, it will contain at least the frames.

Note: due to a lazy developer, this type currently has to be called tick.

Example:

struct MyProcessor {

...

struct tick {

int frames;

double tempo;

};

void operator()(tick tick) { ... }

float operator()(float in, tick tick) { ... }

void operator()(float* in, float* out, tick tick) { ... }

void operator()(float** in, float** out, tick tick) { ... }

// And also the versions that take input and output types as arguments

void operator()(const inputs& ins, outputs& outs, tick tick) { ... }

float operator()(float in, const inputs& ins, outputs& outs, tick tick) { ... }

void operator()(float* in, float* out, const inputs& ins, outputs& outs, tick tick) { ... }

void operator()(float** in, float** out, const inputs& ins, outputs& outs, tick tick) { ... }

// And also the double-taking versions, not duplicated here :-)

};

The currently supported members are:

frames: the buffer size

The plan is to introduce:

- tempo and all things relative to musicality, e.g. current bar, etc. But first we have to define it properly, in a way that is compatible with VST, CLAP, etc.

- time_since_start and other similar timing-related things, which will all be opt-in.

Audio FFT

Supported bindings: ossia

It is pretty common for audio analysis tasks to need access to the audio spectrum.

However, this causes a dramatic situation at the ecosystem level. Every plug-in ships with its own FFT implementation. At best, this duplicates code for no good reason. At worst, it may cause complex issues with FFT libraries that rely on global state shared across the process, such as FFTW.

With the declarative approach of Avendish, however, we can make it so that the plug-in does not directly depend on an FFT implementation: it just requests that a port gets its spectrum computed, and it happens automatically outside of the plug-in's code. This allows hosts such as ossia score to provide their own FFT implementation which will be shared across all Avendish plug-ins, which is strictly better for performance and memory usage.

Making an FFT port

This can be done by extending an audio input port (channel or bus) with a spectrum member.

For instance, given:

struct {

float** samples{};

int channels{};

} my_audio_input;

One can add the following spectrum struct:

struct {

float** samples{};

struct {

float** amplitude{};

float** phase{};

} spectrum;

int channels{};

} my_audio_input;

to get a deinterleaved view of the amplitude & phase:

spectrum.amplitude[0][4]; // The amplitude of the bin at index 4 for channel 0

spectrum.phase[1][2]; // The phase of the bin at index 2 for channel 1

It is also possible to use complex numbers instead:

struct {

double** samples{};

struct {

std::complex<double>** bins;

} spectrum;

int channels{};

} my_audio_input;

spectrum.bins[0][4]; // The complex value of the bin at index 4 for channel 0

Using complex numbers makes it possible to use the C++ standard library functions for complex numbers: std::norm, std::proj...

Note that the spectrum arrays always have N / 2 + 1 elements, N being the current frame size. Note also that the FFT is normalized: the input is divided by the number of samples.

WIP: an important upcoming feature is the ability to make configurable buffered processors, so that one can choose for instance to observe the spectrum over 1024 frames. Right now this has to be handled internally by the plug-in.

Windowing

A window function can be applied by defining an

enum window { <name of the window> };

Supported names currently are hanning and hamming. If there is none, there will be no windowing (technically, a rectangular window). The helper types described below use a Hanning window.

WIP: process with the correct overlap for the window size, e.g. 0.5 for Hanning, 0.67 for Hamming etc. once buffering is implemented.

Helper types

These three types are provided. They give separated amplitude / phase arrays.

halp::dynamic_audio_spectrum_bus<"A", double> a_bus_port;

halp::fixed_audio_spectrum_bus<"B", double, 2> a_stereo_port;

halp::audio_spectrum_channel<"C", double> a_channel_port;

Accessing an FFT object globally

See the section about feature injection to see how a plug-in can be injected with an FFT object which allows to control precisely how the FFT is done.

Example

#pragma once

#include <cmath>

#include <halp/audio.hpp>

#include <halp/controls.hpp>

#include <halp/fft.hpp>

#include <halp/meta.hpp>

namespace examples::helpers

{

/**

* For the usual case where we just want the spectrum of an input buffer,

* no need to template: we can ask it to be precomputed beforehand by the host.

*/

struct PeakBandFFTPort

{

halp_meta(name, "Peak band (FFT port)")

halp_meta(c_name, "avnd_peak_band_fft_port")

halp_meta(uuid, "143f5cb8-d0b1-44de-a1a4-ccd5315192fa")

// TODO implement user-controllable buffering to allow various fft sizes...

int buffer_size = 1024;

struct

{

// Here the host will fill audio.spectrum with a windowed FFT.

// Option A (an helper type is provided)

halp::audio_spectrum_channel<"In", double> audio;

// Option B with the raw spectrum ; no window is defined.

struct

{

halp_meta(name, "In 2");

double* channel{};

// complex numbers... using value_type = double[2] is also ok

struct

{

std::complex<double>* bin;

} spectrum{};

} audio_2;

} inputs;

struct

{

halp::val_port<"Peak", double> peak;

halp::val_port<"Band", int> band;

halp::val_port<"Peak 2", double> peak_2;

halp::val_port<"Band 2", int> band_2;

} outputs;

void operator()(int frames)

{

// Process with option A

{

outputs.peak = 0.;

// Compute the band with the highest amplitude

for(int k = 0; k < frames / 2; k++)

{

const double ampl = inputs.audio.spectrum.amplitude[k];

// The phase does not contribute to the magnitude: |X[k]|^2 = amplitude^2

const double mag_squared = ampl * ampl;

if(mag_squared > outputs.peak)

{

outputs.peak = mag_squared;

outputs.band = k;

}

}

outputs.peak = std::sqrt(outputs.peak);

}

// Process with option B

{

outputs.peak_2 = 0.;

// Compute the band with the highest amplitude

for(int k = 0; k < frames / 2; k++)

{

const double mag_squared = std::norm(inputs.audio_2.spectrum.bin[k]);

if(mag_squared > outputs.peak_2)

{

outputs.peak_2 = mag_squared;

outputs.band_2 = k;

}

}

outputs.peak_2 = std::sqrt(outputs.peak_2);

}

}

};

}

Messages

Supported bindings: ossia, Max, Pd, Python

So far, we have something which can express a great deal of audio plug-ins. However, many objects do not operate in sync with a constant sound input. They work in a more asynchronous way, and with things more complicated than single float, int or string values.

A snippet of code is worth ten thousand words: here is how one defines a message input.

struct MyProcessor {

struct messages {

struct {

static consteval auto name() { return "dump"; }

void operator()(MyProcessor& p, double arg1, std::string_view arg2) {

std::cout << arg1 << ";" << arg2 << "\n";

}

} my_message;

};

};

Note that the messages are declared inside a nested type named messages, rather than in a member variable. A member variable would likely use at least a few bytes per instance. Those bytes would be wasted, as messages are not supposed to have state themselves.

Messages are of course only meaningful in environments which support them. Single-argument messages are equivalent to parameters. If there is more than one argument, not all host systems may be able to handle them; for instance, this does not make much sense for VST3 plug-ins. On the other hand, programming language bindings or systems such as Max and PureData have no problem with them.

Passing existing functions

The following syntaxes are also possible:

void free_function() { printf("Free function\n"); }

struct MyProcessor {

void my_member(int x);

struct messages {

// Using a pointer-to-member function

struct {

static consteval auto name() { return "member"; }

static consteval auto func() { return &MyProcessor::my_member; }

} member;

// Using a lambda-function

struct

{

static consteval auto name() { return "lambda_function"; }

static consteval auto func() {

return [] { printf("lambda\n"); };

}

} lambda;

// Using a free function

struct

{

static consteval auto name() { return "function"; }

static consteval auto func() { return free_function; }

} freefunc;

};

};

In every case, if one wants access to the processor object, it has to be the first argument of the function (except the non-static-member-function case where it is not necessary as the function already has access to the this pointer by definition).

Type-checking

Messages are type-checked: in the first example above, for instance, PureData will return an error for the message [dump foo bar>. For the message [dump 0.1 bar> things will however work out just fine :-)

Arbitrary inputs

It may be necessary to have messages that accept an arbitrary number of inputs. Here is how:

struct {

static consteval auto name() { return "args"; }

void operator()(MyProcessor& p, std::ranges::input_range auto range) {

for(const auto& argument : range) {

// Print the argument whatever the content

// (a library such as fmt can do that directly)

std::visit([](auto& e) { std::cout << e << "\n"; }, argument);

// Try to do something useful with it - here the types depend on what the binding give us. So far only Max and Pd support that so the only possible types are floats, doubles and std::string_view

if(std::get_if<double>(&argument)) { ... }

else if(std::get_if<std::string_view>(&argument)) { ... }

// ... etc

}

}

} my_variadic_message;

Overloading

Overloading is not supported yet, but there are plans for it.

How does the above code work ?

I think that this case is pretty nice and a good example of how C++ can greatly improve type safety over C APIs. A common problem with Max or Pd, for instance, is accessing the incorrect member of a union when iterating over the arguments of a message.

Avendish has the following method, which transforms a Max or Pd argument list, into an iterable coroutine-based range of std::variant.

using atom_iterator = avnd::generator<std::variant<double, std::string_view>>;

inline atom_iterator make_atom_iterator(int argc, t_atom* argv)

{

for (int i = 0; i < argc; ++i) {

switch (argv[i].a_type) {

case A_FLOAT: {

co_yield argv[i].a_w.w_float;

break;

}

case A_SYM: {

co_yield std::string_view{argv[i].a_w.w_sym->s_name};

break;

}

default:

break;

}

}

}

Here, atom_iterator is what gets passed to my_variadic_message. It moves the iteration over the arguments into the calling code. It still handles the matching from type to union member in a generic way, and converts the arguments into safer std::variant instances on the fly. This removes an entire class of possible errors at little cost: in my experiments, for instance, the compiler is able to entirely elide any dynamic memory allocation that would normally be required there.

Callbacks

Supported bindings: ossia, Max, Pd, Python

Just like messages allow to define functions that will be called from an outside request, it is also possible to define callbacks: functions that our processor will call, and which will be sent to the outside world.

As with messages, this does not really make sense for audio processors, for instance. However, it is pretty much necessary to make useful Max or Pd objects.

Callbacks are defined as part of the outputs struct.

Defining a callback with std::function

This is a first possibility, which is pretty simple:

struct {

static consteval auto name() { return "bong"; }

std::function<void(float)> call;

};

The bindings will make sure that a function is present in call, so that our code can call it:

struct MyProcessor {

static consteval auto name() { return "Distortion"; }

struct {

struct {

static consteval auto name() { return "overload"; }

std::function<void(float)> call;

} overload;

} outputs;

float operator()(float input)

{

if(input > 1.0)

outputs.overload.call(input);

return std::tanh(input);

}

};

However, we also want to be able to live without std:: types ; in particular, std::function is a quite complex beast which does type-erasure, potential dynamic memory allocations, and may not be available on all platforms.

Thus, it is also possible to define callbacks with a simple pair of function-pointer & context:

struct {

static consteval auto name() { return "overload"; }

struct {

void (*function)(void*, float);

void* context;

} call;

} overload;

The bindings will fill in the function pointer and the context, so that one can call them:

float operator()(float input)

{

if(input > 1.0)

{

auto& call = outputs.overload.call;

call.function(call.context, input);

}

return std::tanh(input);

}

Of course, this is fairly verbose: thankfully, helpers are provided to make this as simple as std::function but without the overhead (until std::function_view gets implemented):

struct {

static consteval auto name() { return "overload"; }

halp::basic_callback<void(float)> call;

} overload;
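Here is a self-contained sketch of what the bindings do with the function-pointer and context pair. callback_slot and HostOutlet are hypothetical. HostOutlet stands for whatever object the host uses to forward values, for instance a Max or Pd outlet. A captureless lambda converts to the plain function pointer.

```cpp
#include <cassert>

// Function pointer + opaque context, as in the struct above.
struct callback_slot {
  void (*function)(void*, float) = nullptr;
  void* context = nullptr;

  void operator()(float v) const {
    assert(function != nullptr && "the binding must fill the callback before use");
    function(context, v);
  }
};

// Hypothetical host-side object receiving the values.
struct HostOutlet {
  float last = 0.f;
  int count = 0;
};

// What a binding could do when creating the object: point the callback at the host.
inline void bind(callback_slot& slot, HostOutlet& host) {
  slot.context = &host;
  slot.function = [](void* ctx, float v) {
    auto& h = *static_cast<HostOutlet*>(ctx);
    h.last = v;
    ++h.count;
  };
}
```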

Initialization

Supported bindings: Max, Pd

Some media systems provide a way for objects to be passed initialization arguments.

Avendish supports this with a special "initialize" method. Ultimately, I'd like to be able to simply use C++ constructors for this, but haven't managed to yet.

Here's an example:

struct MyProcessor {

void initialize(float a, std::string_view b)

{

std::cout << a << " ; " << b << std::endl;

}

...

};

Max and Pd will report an error if the object is not initialized correctly, e.g. like this:

[my_processor 1.0 foo] // OK

[my_processor foo 1.0] // Not OK

[my_processor] // Not OK

[my_processor 0 0 0 1 2 3] // Not OK

MIDI I/O

Supported bindings: ossia, vst, vst3, clap

Some media systems may have a concept of MIDI input / output. Note that currently this is only implemented for DAW-ish bindings: ossia, VST3, CLAP... Max and Pd do not support it yet (but if there is a standard for passing MIDI messages between objects there I'd love to hear about it !).

There are a few ways to specify MIDI ports.

Here is how one specifies unsafe MIDI ports:

struct

{

static consteval auto name() { return "MIDI"; }

struct

{

uint8_t bytes[3]{};

int timestamp{}; // relative to the beginning of the tick

}* midi_messages{};

std::size_t size{};

} midi_port;

Or, more clearly:

// the name does not matter

struct midi_message {

uint8_t bytes[3]{};

int timestamp{}; // relative to the beginning of the tick

};

struct

{

static consteval auto name() { return "MIDI"; }

midi_message* midi_messages{};

std::size_t size{};

} midi_port;

Here, Avendish bindings will allocate a large enough buffer to store MIDI messages ; this is mainly to enable writing dynamic-allocation-free backends where such a buffer may be allocated statically.
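Here is a sketch of reading such a port. The high nibble of bytes[0] is the message type (0x90 for note-on, 0x80 for note-off), and the low nibble is the channel. Per the MIDI 1.0 specification, a note-on with velocity 0 also means note-off, and this sketch handles that case. midi_input and update_held_notes are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>

struct midi_message {
  uint8_t bytes[3]{};
  int timestamp{}; // relative to the beginning of the tick
};

struct midi_input {
  midi_message* midi_messages{};
  std::size_t size{};
};

// Returns the number of notes held after this tick's messages.
constexpr int update_held_notes(int held, const midi_input& port) {
  for (std::size_t i = 0; i < port.size; ++i) {
    const midi_message& m = port.midi_messages[i];
    const auto status = static_cast<uint8_t>(m.bytes[0] & 0xF0); // drop the channel
    const bool note_on = status == 0x90 && m.bytes[2] > 0;
    const bool note_off = status == 0x80 || (status == 0x90 && m.bytes[2] == 0);
    if (note_on)
      ++held;
    else if (note_off && held > 0)
      --held;
  }
  return held;
}
```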

It is also possible to do this if you don't expect to run your code on Arduinos:

struct

{

// Using a non-fixed size type here will enable MIDI messages > 3 bytes, if for instance your

// processor expects to handle SYSEX messages.

struct msg {

std::vector<uint8_t> bytes;

int64_t timestamp{};

};

std::vector<msg> midi_messages;

} midi_port;

Helpers

The library provides helper types which are a good compromise between these two solutions, as they are based on boost::container::small_vector. For small numbers of MIDI messages, there will be no memory allocation. Pathological cases, such as a host sending a thousand MIDI messages in a single tick, can still be handled without losing messages.

The type is very simple:

halp::midi_bus<"In"> midi;

MIDI synth example

This example is a very simple synthesizer. Note that for the sake of simplicity for the implementer, we use two additional libraries:

- libremidi provides a useful enum of common MIDI message types.

- libossia provides frequency <-> MIDI note and gain <-> MIDI velocity conversion operations.
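For reference, here are the standard equal-temperament conversions such a synth relies on. They assume the usual tuning reference of A4 = 440 Hz at MIDI note 69; whether libossia uses exactly this reference is an assumption. Δφ is the per-sample phase increment that the example computes as increment, f_s is the sample rate and g is the linear gain:

```latex
f(n) = 440 \cdot 2^{(n - 69)/12}\ \mathrm{Hz}, \qquad
\Delta\varphi = \frac{2\pi f(n)}{f_s}, \qquad
y[k] = g \sin\left(\varphi_0 + k\,\Delta\varphi\right)
```

For instance, note 69 at f_s = 48000 Hz gives Δφ = 2π · 440 / 48000 ≈ 0.0576 rad per sample.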

#pragma once

#include <halp/audio.hpp>

#include <halp/controls.hpp>

#include <halp/meta.hpp>

#include <halp/midi.hpp>

#include <halp/sample_accurate_controls.hpp>

#include <libremidi/message.hpp>

#include <ossia/network/dataspace/gain.hpp>

#include <ossia/network/dataspace/time.hpp>

#include <ossia/network/dataspace/value_with_unit.hpp>

namespace examples

{

/**

* This example exhibits a simple, monophonic synthesizer.

* It relies on some libossia niceties.

*/

struct Synth

{

halp_meta(name, "My example synth");

halp_meta(c_name, "oscr_Synth")

halp_meta(category, "Demo");

halp_meta(author, "Jean-Michaël Celerier");

halp_meta(description, "A demo synth");

halp_meta(uuid, "93eb0f78-3d97-4273-8a11-3df5714d66dc");

struct

{

/** MIDI input: simply a list of timestamped messages.

* Timestamp are in samples, 0 is the first sample.

*/

halp::midi_bus<"In"> midi;

} inputs;

struct

{

halp::fixed_audio_bus<"Out", double, 2> audio;

} outputs;

struct conf

{

int sample_rate{44100};

} configuration;

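// The binding fills a field named rate (see "Audio setup"), not sample_rate

struct setup { double rate{44100.}; };

void prepare(setup s) { configuration.sample_rate = static_cast<int>(s.rate); }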

int in_flight = 0;

ossia::frequency last_note{};

ossia::linear last_volume{};

double phase = 0.;

/** Simple monophonic synthesizer **/

void operator()(int frames)

{

// 1. Process the MIDI messages. We'll just play the latest note-on

// in a not very sample-accurate way..

for(auto& m : inputs.midi.midi_messages)

{

// Let's ignore channels

switch((libremidi::message_type)(m.bytes[0] & 0xF0))

{

case libremidi::message_type::NOTE_ON:

in_flight++;

// Let's leverage the ossia unit conversion framework (adapted from Jamoma):

// bytes is interpreted as a midi pitch and then converted to frequency.

last_note = ossia::midi_pitch{m.bytes[1]};

// Store the velocity in linear gain

last_volume = ossia::midigain{m.bytes[2]};

break;

case libremidi::message_type::NOTE_OFF:

in_flight--;

break;

default:

break;

}

}

// 2. Quit if we don't have any more note to play

if(in_flight <= 0)

return;

// 3. Output some bleeps

double increment

= ossia::two_pi * last_note.dataspace_value / double(configuration.sample_rate);

auto& out = outputs.audio.samples;

for(int64_t j = 0; j < frames; j++)

{

out[0][j] = last_volume.dataspace_value * std::sin(phase);

out[1][j] = out[0][j];

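phase += increment;

// Keep the phase within [0, 2π) to avoid losing floating-point precision over time

if(phase >= ossia::two_pi)

phase -= ossia::two_pi;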

}

}

};

}

Image ports

Supported bindings: ossia

Some media systems have the ability to process images. Avendish is not restricted here :-)

Note that this part of the system is still very much in flux, in particular with regard to how allocations are supposed to be handled. Any feedback on this is welcome.

First, here is how we define a viable texture type:

struct my_texture

{

enum format { RGBA }; // The only recognized one so far

unsigned char* bytes; // Pixel data: 4 bytes (R, G, B, A) per pixel

int width;

int height;

bool changed; // Signals that the pixel data was modified (see below)

};

Then, a texture port:

struct {

my_texture texture;

} input;

Note that currently, it is the responsibility of the plug-in author to allocate the texture and set the changed bool for output ports. Input textures come from outside.

Due to the large cost of uploading a texture, changed serves two purposes: it tells the plug-in author that an input texture has been touched, and it lets the plug-in author tell the external environment that an output has changed and must be re-uploaded to the GPU.
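To make the allocation and changed protocol concrete, here is a minimal sketch of a processor that inverts the colors of its input, using the raw texture type defined above. It assumes tightly packed RGBA data (four bytes per pixel) and keeps the output pixels in a std::vector owned by the processor; the InvertFilter name, the port layout and this storage strategy are illustrative choices, not something Avendish mandates.

```cpp
#include <cstddef>
#include <vector>

struct my_texture
{
  enum format { RGBA };
  unsigned char* bytes;
  int width;
  int height;
  bool changed;
};

// Hypothetical processor illustrating the `changed` handshake.
struct InvertFilter
{
  struct { my_texture texture{}; } input;
  struct { my_texture texture{}; } output;

  // The plug-in author owns the memory behind the output texture.
  std::vector<unsigned char> storage;

  void operator()()
  {
    auto& in = input.texture;
    auto& out = output.texture;

    // No data yet, or nothing new from the host: keep the previous output.
    if(in.bytes == nullptr || !in.changed)
      return;
    in.changed = false;

    // (Re)allocate the output storage only when the size changes.
    const std::size_t size = std::size_t(in.width) * std::size_t(in.height) * 4;
    if(storage.size() != size)
      storage.resize(size);
    out.bytes = storage.data();
    out.width = in.width;
    out.height = in.height;

    for(std::size_t i = 0; i < size; i += 4)
    {
      out.bytes[i + 0] = 255 - in.bytes[i + 0];
      out.bytes[i + 1] = 255 - in.bytes[i + 1];
      out.bytes[i + 2] = 255 - in.bytes[i + 2];
      out.bytes[i + 3] = in.bytes[i + 3]; // Keep alpha as-is
    }

    // Tell the external environment that the output must be re-uploaded.
    out.changed = true;
  }
};
```

The text above does not say who clears the output's changed flag once the upload is done; this sketch assumes the host does, so check the behaviour of the binding you target.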

GPU processing

Check the Writing GPU Processors chapter of this book!

Helpers

A few types are provided:

- halp::rgba_texture

- halp::texture_input<"Name"> provides methods to get an RGBA pixel:

auto [r,g,b,a] = tex.get(10, 20);

- halp::texture_output<"Name"> provides methods to set an RGBA pixel:

tex.set(10, 20, {.r = 10, .g = 100, .b = 34, .a = 255});

tex.set(10, 20, 10, 100, 34, 255);

as well as useful methods to initialize the texture and mark it ready for upload:

// Call this in the constructor or before processing starts

tex.create(100, 100);

// Call this after making changes to the texture

tex.upload();
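These helpers hide the pixel arithmetic. With the raw my_texture type from the beginning of this section, equivalent get and set functions could look like the following sketch. It assumes the bytes are tightly packed RGBA (four bytes per pixel, no row padding) stored row by row, which the text does not spell out; rgba_pixel, pixel_offset, get_pixel and set_pixel are hypothetical names and not halp's actual implementation.

```cpp
#include <cstddef>

struct my_texture
{
  enum format { RGBA };
  unsigned char* bytes;
  int width;
  int height;
  bool changed;
};

struct rgba_pixel
{
  unsigned char r, g, b, a;
};

// Byte offset of pixel (x, y), assuming row-major RGBA with no row padding.
inline std::size_t pixel_offset(const my_texture& tex, int x, int y)
{
  return (std::size_t(y) * std::size_t(tex.width) + std::size_t(x)) * 4;
}

inline rgba_pixel get_pixel(const my_texture& tex, int x, int y)
{
  const unsigned char* p = tex.bytes + pixel_offset(tex, x, y);
  return {p[0], p[1], p[2], p[3]};
}

inline void set_pixel(my_texture& tex, int x, int y, rgba_pixel px)
{
  unsigned char* p = tex.bytes + pixel_offset(tex, x, y);
  p[0] = px.r;
  p[1] = px.g;
  p[2] = px.b;
  p[3] = px.a;
}
```

In a 2x2 texture, pixel (1, 1) then starts at byte (1 * 2 + 1) * 4 = 12.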

Image processor example

This example is a very simple image filter. It takes an input image and downscales & degrades it.

#pragma once

#include <cmath>

#include <algorithm>

#include <cstdint>

#include <halp/audio.hpp>

#include <halp/controls.hpp>

#include <halp/meta.hpp>

#include <halp/sample_accurate_controls.hpp>

#include <halp/texture.hpp>

namespace examples

{

struct TextureFilterExample

{

halp_meta(name, "My example texture filter");

halp_meta(c_name, "oscr_TextureFilterExample");

halp_meta(category, "Demo");

halp_meta(author, "Jean-Michaël Celerier");

halp_meta(description, "Example texture filter");

halp_meta(uuid, "3183d03e-9228-4d50-98e0-e7601dd16a2e");

struct

{

halp::texture_input<"In"> image;

halp::knob_f32<"Gain", halp::range{0., 255., 0.}> gain;

halp::knob_i32<"Downscale", halp::range{1, 32, 8}> downscale;

} inputs;

struct

{

halp::texture_output<"Out"> image;

} outputs;

// Some initialization can be done in the constructor.

TextureFilterExample() noexcept

{

// Allocate some initial data

outputs.image.create(1, 1);

}

void operator()()

{

auto& in_tex = inputs.image.texture;

auto& out_tex = outputs.image.texture;

// GPU readbacks are asynchronous: reading textures may take some time,

// so the data may not be available from the beginning.

if(in_tex.bytes == nullptr)

return;

// Texture hasn't changed since last time, no need to recompute anything

if(!in_tex.changed)

return;

in_tex.changed = false;

const double downscale_factor = inputs.downscale;

const int small_w = in_tex.width / downscale_factor;

const int small_h = in_tex.height / downscale_factor;

// We (dirtily) downscale by a factor of downscale_factor

if(out_tex.width != small_w || out_tex.height != small_h)

outputs.image.create(small_w, small_h);

for(int y = 0; y < small_h; y++)

{

for(int x = 0; x < small_w; x++)

{

// Get a pixel

auto [r, g, b, a] = inputs.image.get(

std::floor(x * downscale_factor), std::floor(y * downscale_factor));

// (Dirtily) Take the luminance and compute its contrast

double contrasted = std::pow((r + g + b) / (3. * 255.), 4.);

// (Dirtily) Posterize

uint8_t col

= std::clamp(uint8_t(contrasted * 8) * (255 / 8.) * inputs.gain, 0., 255.);

// Update the output texture

outputs.image.set(x, y, col, col, col, 255);

}

}

// Call this when the texture changed

outputs.image.upload();

}

};

}
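The per-pixel math of this filter can be checked in isolation. The sketch below extracts it into a free function (posterize is a hypothetical name, not part of halp) that reproduces the same luminance, contrast and posterization steps as the loop above, with the gain passed as a plain number.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Same steps as the filter above: average the RGB components into [0, 1],
// raise to the 4th power for contrast, quantize to 8 levels, scale by the gain,
// then clamp to the [0, 255] range of an 8-bit channel.
inline std::uint8_t posterize(std::uint8_t r, std::uint8_t g, std::uint8_t b, double gain)
{
  const double contrasted = std::pow((r + g + b) / (3. * 255.), 4.);
  const double level = std::uint8_t(contrasted * 8) * (255 / 8.) * gain;
  return std::uint8_t(std::clamp(level, 0., 255.));
}
```

Because of the 4th power, a mid-gray input (128, 128, 128) falls into the lowest band and comes out black, while white with a gain of 1 stays at 255.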

Metadata

Supported bindings: all

So far, the main metadata we have seen for our processor is its name:

static consteval auto name() { return "Foo"; }

Or with the helper macro:

halp_meta(name, "Foo")

There are a few more useful metadata fields; each one is used if the binding supports exposing it. Here is a list in order of importance; it is recommended to use strings for all of them and to fill in as many as possible.

- name: the pretty name of the object.

- c_name: a C-identifier-compatible name for the object. This is necessary for instance for systems such as Python, PureData or Max, which do not support spaces or special characters in names.

- uuid: a string such as 8a4be4ec-c332-453a-b029-305444ee97a0, generated for instance with the uuidgen command on Linux, Mac and Windows, or with uuidgenerator.net otherwise. This provides a computer-identifiable unique identifier for your plug-in, ensuring that hosts don't confuse plug-ins that share a name but come from different vendors when reloading them (sadly, on some older APIs such collisions are unavoidable).

- description: a textual description of the processor.

- vendor: who distributes the plug-in.

- product: the product name, if the plug-in is part of a larger product.

- version: a version string, ideally convertible to an integer, as some older APIs expect integer versions.

- category: a category for the plug-in: "Audio", "Synth", "Distortion", "Chorus"... There's no standard, but one can check for instance the names used in LV2 or the list mentioned by DISTRHO.

- copyright: (c) the plug-in authors 2022-xxxx

- license: an SPDX identifier for the license, or a link to a license document.

- url: the URL of the plug-in, if any.

- email: a contact e-mail, if any.

- manual_url: a URL for a user manual, if any.

- support_url: a URL for user support (forum, chat, etc.), if any.

Supported port types

The supported port types depend on the back-end. There is, however, some flexibility.

Simple ports

Float

Supported bindings: all

struct {

float value;

} my_port;

Double

Supported bindings: all except Max / Pd message processors (they will work in Max / Pd audio processors), as the Max / Pd API expects a pointer to an existing float value.

struct {

double value;

} my_port;

Int

Supported bindings: same as double.

struct {

int value;

} my_port;

Bool

Supported bindings: same as double.

struct {

bool value;

} my_port;

Note that depending on the widget you use, UIs may create a toggle, a maintained button or a momentary bang.

String

Supported bindings: ossia, Max, Pd, Python

struct {

std::string value;

} my_port;

Enumerations

Supported bindings: all

Enumerations are interesting. There are multiple ways to implement them.

Mapping a string to a value

Consider the following port:

template<typename T>

using my_pair = std::pair<std::string_view, T>;

struct {

halp_meta(name, "Enum 1");

enum widget { combobox };

struct range {